MIT's Modular Robotic Chain Is Whatever You Want It to Be

As sensors, computers, actuators, and batteries decrease in size and increase in efficiency, it becomes possible to make robots much smaller without sacrificing a whole lot of capability. There’s a lower limit on usefulness, however, if you’re making a robot that needs to interact with humans or human-scale objects. You can continue to leverage shrinking components if you make robots that are modular: in other words, big robots that are made up of lots of little robots.

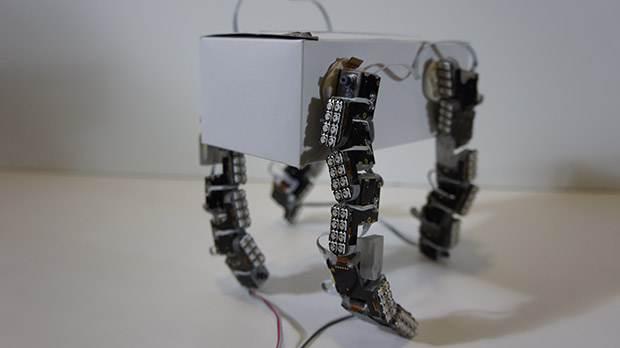

In some ways, it’s more complicated to do this, because if one robot is complicated, n robots tend to be complicated to the nth power. If you can get all of the communication and coordination figured out, though, a modular system offers tons of advantages: robots that come in any size you want, any configuration you want, and that are exceptionally easy to repair and reconfigure on the fly.

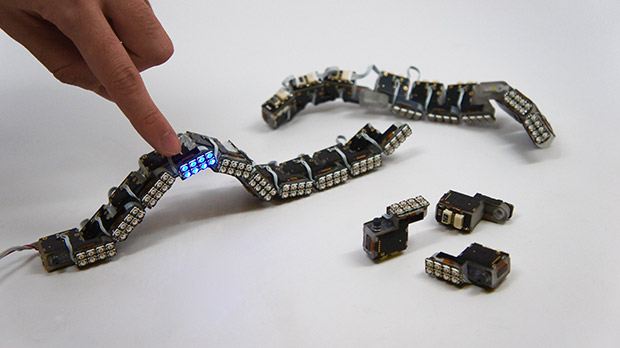

MIT’s ChainFORM is an interesting take on this idea: it’s an evolution of last year’s LineFORM multifunctional snake robot that introduces modularity to the system, letting you tear off a strip of exactly as much robot as you need and then reconfigure it to do all kinds of things.

MIT Media Lab calls ChainFORM a “shape changing interface,” because it comes from their Tangible Media Group, but if it came from a robotics group, it would be called a “poke-able modular snake robot with blinky lights.” Each ChainFORM module includes touch detection on multiple surfaces, angular detection, blinky lights, and motor actuation via a single servo motor. The trickiest bit is the communication architecture: MIT had to invent something that can automatically determine how many modules there are and how they are connected to each other, while preserving the capability for real-time input and output. Since the relative position and orientation of each module are known at all times, you can do cool things like make a dynamically reconfigurable display that will continue to function (or adaptively change its function) even as you change the shape of the modules.
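The article doesn't say how ChainFORM turns its angle readings into module positions. As a minimal sketch, assuming a planar chain of identical modules in which each module reports its bend angle relative to the previous one, the pose of every module follows from accumulating those angles along the chain (planar forward kinematics). The function name `module_poses`, the unit module length, and the "first module faces +x from the origin" convention are all hypothetical choices made for illustration.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, y, heading in degrees)


def module_poses(joint_angles_deg: List[float], module_length: float = 1.0) -> List[Pose]:
    """Return the pose of the far end of each module in a planar chain.

    Hypothetical model: the first module starts at the origin facing +x,
    and joint_angles_deg[k] is the bend of module k relative to module k-1.
    """
    x, y, heading = 0.0, 0.0, 0.0
    poses: List[Pose] = []
    for angle in joint_angles_deg:
        heading += angle                      # bend angles accumulate along the chain
        rad = math.radians(heading)
        x += module_length * math.cos(rad)    # step one module length along the heading
        y += module_length * math.sin(rad)
        poses.append((x, y, heading))
    return poses


if __name__ == "__main__":
    # Three modules: straight, then two 90-degree bends (a "U" shape).
    for x, y, h in module_poses([0, 90, 90]):
        print(f"x={x:.2f} y={y:.2f} heading={h:.0f}")
```

Because every pose is recomputed from the current angles, a display routine built on something like this could keep mapping content onto the modules as the chain is bent, which is the kind of shape-aware output the paragraph describes.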

ChainFORM is not totally modular, in the sense that each module is not yet completely self-contained: it’s tethered for power, and for overall control there’s a master board that interfaces with a computer over USB. The power tether also limits the total number of modules you can use at once because of the resistance of the connectors: no more than 32, unless you also connect power from the other end. The modules are still powerful, though: each can exert 0.8 kg·cm (about 0.08 N·m) of torque, which is enough to move small things. It won’t move your limbs, but you’ll feel it trying, which makes it effective for haptic feedback applications and able to support (and move) much of its own weight.
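The article gives only the 32-module limit, not the electrical details behind it. A simplified model, assuming every connector has the same resistance R and every module draws the same constant current I (both are assumptions, not figures from the article), shows why connector resistance caps the chain length and why feeding power from the far end as well helps. Fed from one end, the connector nearest the supply carries the current of all n modules, so the drop at the last module is R·I·n(n+1)/2 and grows quadratically with n. Fed from both ends at the same voltage, each side supplies only about half the chain. The function names and all numeric values below are hypothetical.

```python
def worst_drop_one_end(n: int, r_ohm: float, i_amp: float) -> float:
    """Voltage drop at the far module when power enters at one end only.

    Connector k (counting from the supply) carries the current of the
    n - k + 1 modules beyond it, so the drops sum to R * I * n(n + 1) / 2.
    """
    return r_ohm * i_amp * n * (n + 1) / 2


def worst_drop_both_ends(n: int, r_ohm: float, i_amp: float) -> float:
    """Worst-case drop (at the middle) when both ends are fed at the same voltage."""
    if n % 2 == 0:
        # By symmetry no current crosses the centre connector, so each half
        # behaves like a chain of n/2 modules fed from one end.
        return worst_drop_one_end(n // 2, r_ohm, i_amp)
    # Odd n: the middle module draws I/2 from each side; the drop there is R * I * m^2 / 2.
    m = (n + 1) // 2
    return r_ohm * i_amp * m * m / 2


def max_modules(budget_v: float, r_ohm: float, i_amp: float,
                both_ends: bool = False, limit: int = 10_000) -> int:
    """Largest chain length whose worst-case drop stays within budget_v."""
    drop = worst_drop_both_ends if both_ends else worst_drop_one_end
    n = 0
    while n < limit and drop(n + 1, r_ohm, i_amp) <= budget_v:
        n += 1
    return n


if __name__ == "__main__":
    # Hypothetical values, not ChainFORM measurements.
    R, I, BUDGET = 0.02, 0.1, 1.0
    print("one end:  ", max_modules(BUDGET, R, I))
    print("both ends:", max_modules(BUDGET, R, I, both_ends=True))
```

With these made-up values (0.02 Ω per connector, 0.1 A per module, a 1 V allowable drop) the single-feed chain stops at 31 modules and the dual-feed chain at 62. The numbers only illustrate the scaling; they are not derived from ChainFORM's hardware.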

If it looks like ChainFORM has a lot of potential for useful improvements, that’s because ChainFORM has a lot of potential for useful improvements, according to the people who are developing useful improvements for it. They want to put displays on every surface, and increase their resolution. They want more joint configurations for connecting different modules and a way to split modules into different branches. And they want the modules to be able to self-assemble, like many modular robots are already able to do. The researchers also discuss things like adding different kinds of sensor modules and actuator modules, which would certainly increase the capability of the system as a whole without increasing the complexity of individual modules, but it would also make ChainFORM into more of a system of modules, which is (in my opinion) a bit less uniquely elegant than what ChainFORM is now.

“ChainFORM: A Linear Integrated Modular Hardware System for Shape Changing Interfaces,” by Ken Nakagaki, Artem Dementyev, Sean Follmer, Joseph A. Paradiso, and Hiroshi Ishii from the MIT Media Lab and Stanford University was presented at UIST 2016.